Kai-Fu Lee, head of the Chinese AI company 01.ai, recently highlighted a significant achievement in AI development made despite substantial regulatory and resource constraints. Lee said his company trained an advanced AI model, ranked sixth in performance according to LMSYS at UC Berkeley, using only 2,000 GPUs and a budget of $3 million. That is a stark contrast to the far larger budgets reportedly spent by companies such as OpenAI: GPT-4’s training cost is estimated at $80 million to $100 million, and GPT-5’s is rumored to be around $1 billion.

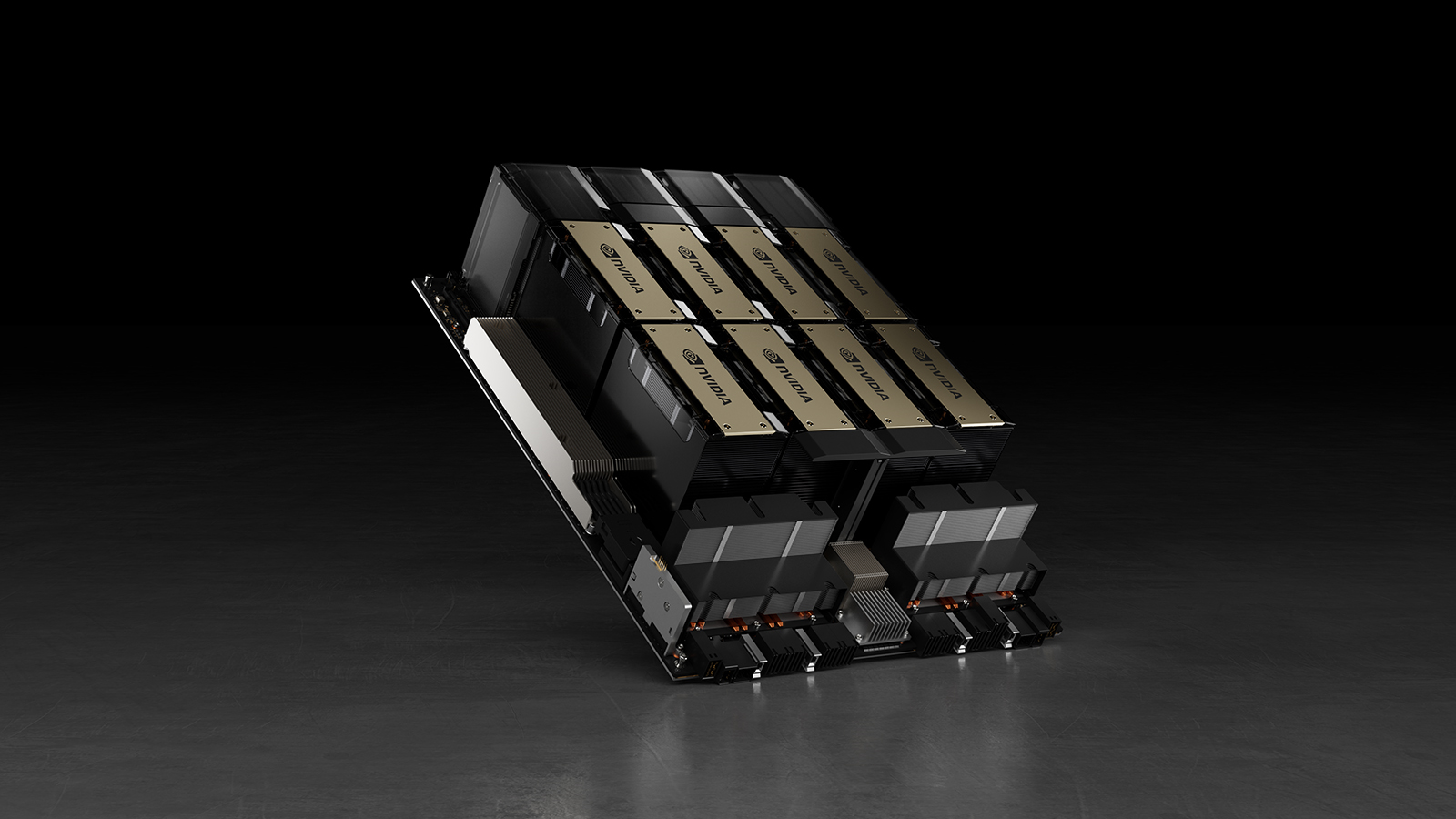

U.S. export regulations limit Chinese companies’ access to state-of-the-art GPUs, which are crucial for training powerful AI models. Despite these constraints, 01.ai has not only trained its model but also optimized its inference pipeline to cut costs significantly. The company’s Yi-Lightning model relies on engineering changes that turn compute-heavy work into memory-oriented tasks, along with a multi-layer caching system and a specialized inference engine. As a result, Yi-Lightning’s inference costs just 10 cents per million tokens, about 1/30th of what comparable models cost.
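To make the quoted price concrete, here is a back-of-the-envelope calculation in Python. It uses the 10-cents-per-million-token figure above. For illustration only, it assumes that “comparable models” cost exactly 30 times as much, although the article says “about” 1/30th. The one-billion-token workload and the helper name `inference_cost_usd` are hypothetical.

```python
# Back-of-the-envelope inference cost comparison (a sketch, not vendor pricing).
# Assumption: comparable models cost exactly 30x Yi-Lightning's per-token price;
# the article only says "about 1/30th", so results are approximate.

YI_LIGHTNING_USD_PER_M_TOKENS = 0.10  # 10 cents per million tokens (from the article)
COST_RATIO = 30                        # assumed exact ratio for illustration

def inference_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Return the USD cost of processing `tokens` tokens at a per-million-token price."""
    return tokens / 1_000_000 * usd_per_million

# Implied price of a "comparable" model under the 30x assumption (~$3.00 per million tokens).
comparable_usd_per_m = YI_LIGHTNING_USD_PER_M_TOKENS * COST_RATIO

workload = 1_000_000_000  # hypothetical workload: one billion tokens
yi_cost = inference_cost_usd(workload, YI_LIGHTNING_USD_PER_M_TOKENS)
other_cost = inference_cost_usd(workload, comparable_usd_per_m)

print(f"Yi-Lightning: ${yi_cost:,.2f}  comparable model: ${other_cost:,.2f}")
```

At these assumed rates, a billion tokens would cost about $100 on Yi-Lightning versus about $3,000 on a comparable model. The gap scales linearly with volume, which is why per-token price matters so much for high-traffic deployments.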

These accomplishments underscore a broader narrative in the tech industry: targeted optimizations and efficient engineering can deliver significant advances with limited resources. This is especially pertinent in regions where restricted access to cutting-edge hardware pushes AI companies to develop alternative strategies to stay competitive.
