As AI training datasets grow more complex, GPUs are increasingly limited by their finite built-in high-bandwidth memory (HBM). A promising development comes from Panmnesia, a company backed by South Korea’s KAIST research institute, which has developed technology that expands the memory available to a GPU using PCIe-attached memory or even SSDs.

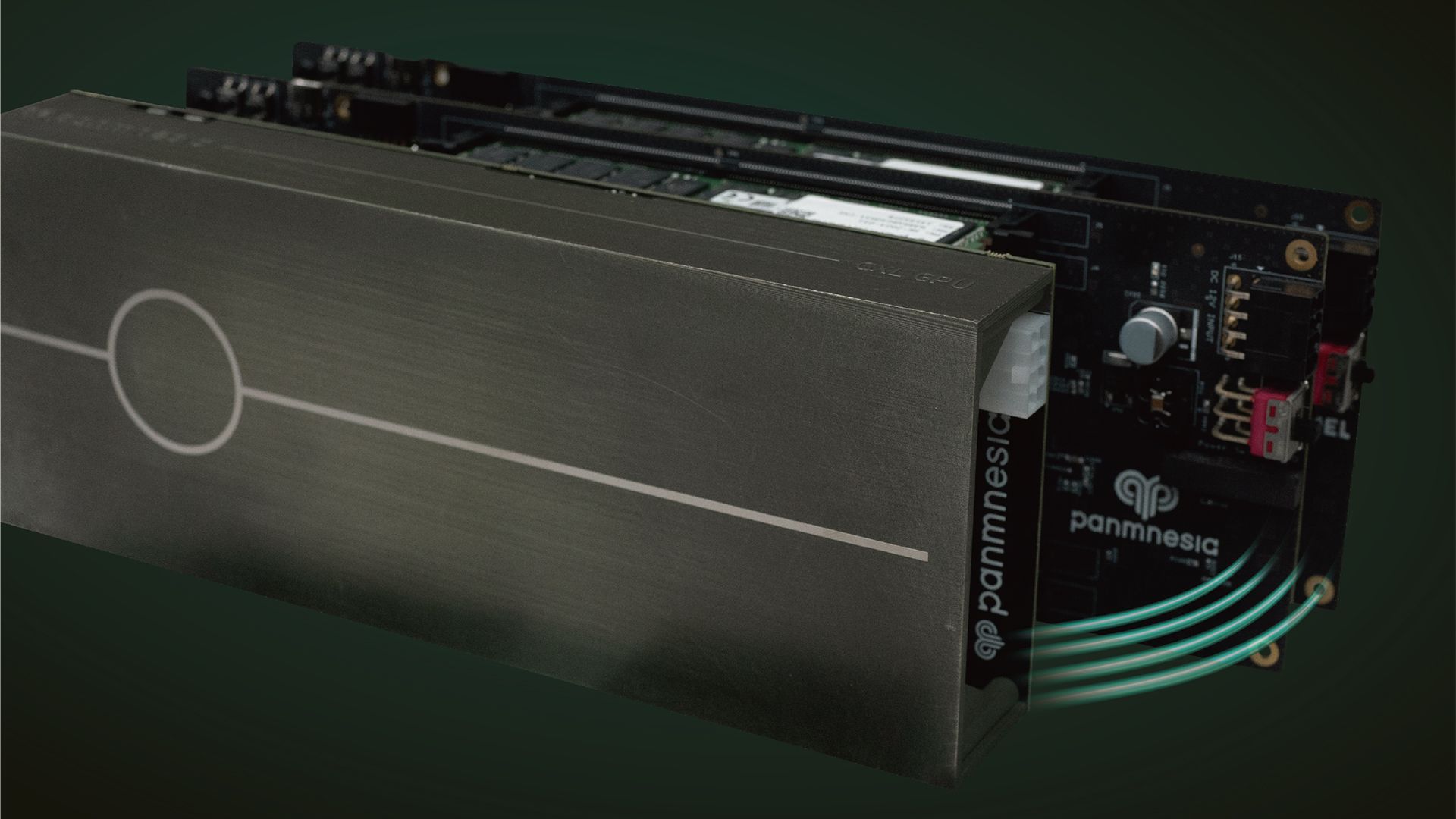

Traditionally, a GPU’s memory capacity has been limited to what can be integrated directly on the GPU itself. Panmnesia’s low-latency CXL IP instead expands GPU memory by connecting additional memory devices over the PCIe bus. The memory pool can therefore grow beyond the GPU’s built-in limit, and SSDs can be attached for further expansion.

Integrating CXL for GPU memory expansion posed substantial challenges, primarily because GPU architectures lack a CXL logic fabric that could support DRAM or SSD endpoints. Panmnesia’s solution was to create a CXL 3.1-compliant root complex equipped with multiple root ports and a host bridge with a host-managed device memory (HDM) decoder. This setup leads the GPU’s memory subsystem to treat PCIe-connected memory as system memory, effectively enlarging the memory capacity accessible to the GPU.
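To make the role of the host-managed device memory decoder more concrete, here is a minimal Python sketch of the address routing such a decoder performs: each entry claims a window of host physical addresses and forwards accesses in that window to the root port behind which a DRAM or SSD endpoint sits. This is an illustration only, not Panmnesia’s implementation. The names `HdmRange` and `HdmDecoder`, the address windows, and the port numbers are all hypothetical, and real decoders are hardware logic that also handles details such as interleaving, which are omitted here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

GiB = 1 << 30


@dataclass(frozen=True)
class HdmRange:
    """One decoder entry: an address window owned by a root port (hypothetical model)."""
    base: int        # first host physical address of the window
    size: int        # window length in bytes
    root_port: int   # illustrative root-port index for the attached endpoint
    kind: str        # "dram" or "ssd", used only for labelling in this sketch

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class HdmDecoder:
    """Routes a physical address to (root_port, offset within the endpoint)."""

    def __init__(self, ranges: List[HdmRange]) -> None:
        self._ranges = sorted(ranges, key=lambda r: r.base)
        # Windows must not overlap, otherwise routing would be ambiguous.
        for prev, cur in zip(self._ranges, self._ranges[1:]):
            if prev.base + prev.size > cur.base:
                raise ValueError("HDM ranges must not overlap")

    def route(self, addr: int) -> Optional[Tuple[int, int]]:
        for r in self._ranges:
            if r.contains(addr):
                return r.root_port, addr - r.base
        return None  # address is not backed by any expansion endpoint


# Assumed layout: a 64 GiB DRAM expander and a 256 GiB SSD-backed expander.
decoder = HdmDecoder([
    HdmRange(base=0x10_0000_0000, size=64 * GiB, root_port=0, kind="dram"),
    HdmRange(base=0x20_0000_0000, size=256 * GiB, root_port=1, kind="ssd"),
])

print(decoder.route(0x10_0000_1000))  # falls in the DRAM window
print(decoder.route(0x20_0000_0005))  # falls in the SSD window
print(decoder.route(0x0))             # outside every window
```

From the GPU’s point of view, loads and stores to either window look like ordinary memory accesses; only the decoder knows which endpoint actually serves them.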

The results from testing this technology are impressive. Panmnesia’s solution, referred to as CXL-Opt, demonstrated a round-trip latency in the double-digit nanoseconds, a significant improvement over earlier prototypes developed by industry giants. In practical terms, this means lower-latency memory operations and faster data processing. Tests also showed significantly shorter execution times with CXL-Opt than with unified virtual memory (UVM).