An exploration of artificial intelligence capabilities outlines a strategy for raising smaller open-source language models to the reasoning level of OpenAI’s o1 model, which has been described as having PhD-level intelligence. The strategy, detailed by Harish SG, a cybersecurity and AI security engineer, relies on a dynamic prompting paradigm that combines Dynamic Chain of Thoughts (CoT), reflection, and verbal reinforcement learning.
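This summary does not reproduce Harish's actual prompts. The following is therefore only a minimal sketch of how chain-of-thought reasoning, self-reflection, and a self-assigned (verbal) reward could be combined in a retry loop. It assumes a generic `generate(prompt) -> str` model call supplied by the caller. All names here (`reflective_cot`, `parse_reward`, `Attempt`, the 0.8 threshold, the prompt wording) are hypothetical illustrations and are not the author's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt string to a model reply.
Generate = Callable[[str], str]


@dataclass
class Attempt:
    reasoning: str
    reflection: str
    reward: float


@dataclass
class Result:
    answer: str
    attempts: List[Attempt] = field(default_factory=list)


def parse_reward(text: str) -> float:
    """Read a self-assigned score, clamped to [0, 1]; fall back to 0.0 if unparseable."""
    try:
        return min(1.0, max(0.0, float(text.strip())))
    except ValueError:
        return 0.0


def reflective_cot(generate: Generate, question: str,
                   threshold: float = 0.8, max_rounds: int = 3) -> Result:
    """Hypothetical loop: reason step by step, self-critique, self-score, retry if low."""
    result = Result(answer="")
    feedback = ""
    for _ in range(max_rounds):
        # 1. Chain of thought: request step-by-step reasoning, including any prior critique.
        reasoning = generate(
            f"Question: {question}\n{feedback}"
            "Think step by step, then give a final answer."
        )
        # 2. Reflection: ask the model to critique its own reasoning.
        reflection = generate(f"Critique this reasoning and list any errors:\n{reasoning}")
        # 3. Verbal reward: ask the model for a numeric self-score of the reasoning.
        reward = parse_reward(generate(
            f"Reasoning:\n{reasoning}\nCritique:\n{reflection}\n"
            "Score the reasoning from 0 to 1. Reply with the number only."
        ))
        result.attempts.append(Attempt(reasoning, reflection, reward))
        result.answer = reasoning  # keep the latest reasoning as the current answer
        if reward >= threshold:
            break
        # Feed the critique back so the next round can adjust its approach.
        feedback = f"Previous critique: {reflection}\n"
    return result
```

Several design choices are open in this sketch and are not specified in the summary: whether the same model or a separate judge assigns the reward, how the critique is phrased when it is fed back, and when the loop stops.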

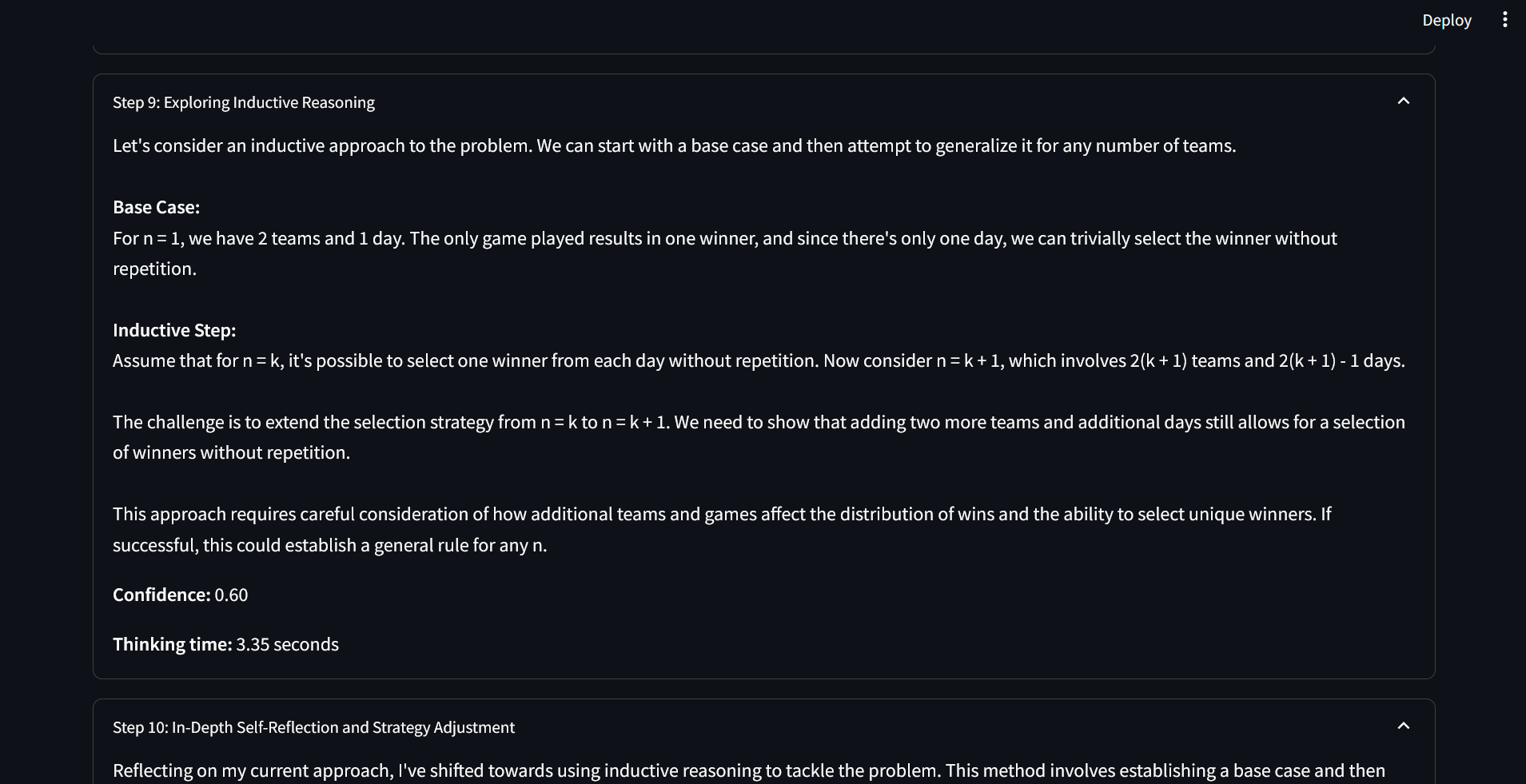

Harish’s method begins with the use of

The application of a reward system through

Harish applied this paradigm to Claude 3.5 Sonnet. He tested it on challenging problems from the JEE Advanced and UPSC prelims exams and on mathematical problems from the Putnam Competition. The results were promising: with the new prompting techniques, Claude 3.5 Sonnet performed better, and on some specific tasks it even surpassed o1.

This approach underscores the potential for smaller, more accessible AI models to achieve high-level reasoning capabilities through structured, reflective, and adaptive prompting paradigms.

For the detailed methodology and further insights into this research, see Harish SG’s full article.