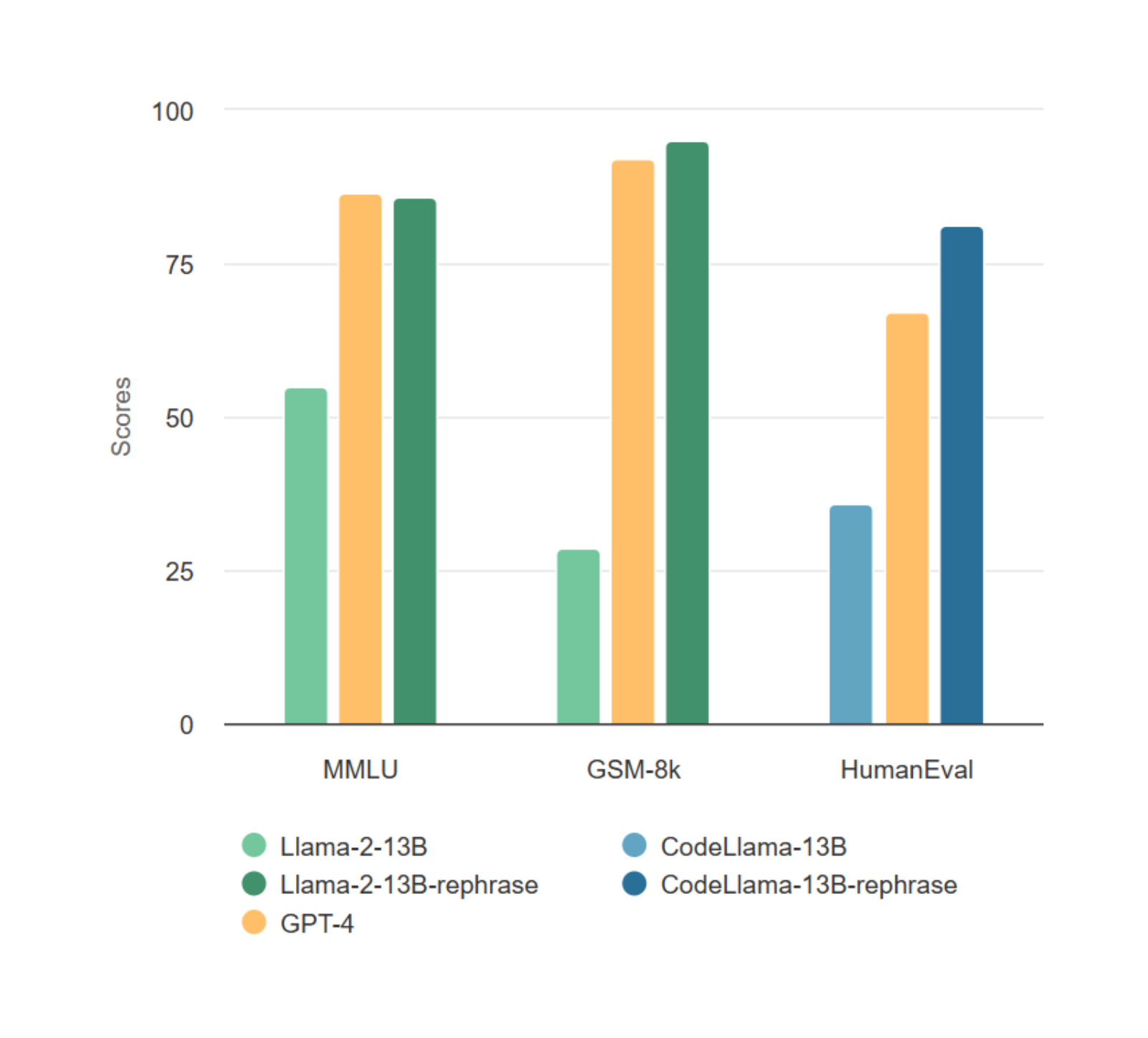

Researchers have developed the LLM Decontaminator, a method for detecting and removing contamination, meaning test data that has leaked into language model training sets. The team found that simple variations of test data, such as rephrasing or translation, can bypass existing detection methods. The LLM Decontaminator uses language models to identify and remove these rephrased samples, significantly improving contamination detection. The tool is open source and available for community use.
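To illustrate why rephrasing can slip past existing checks, here is a minimal sketch of one common style of detector: word n-gram overlap between a training sample and a test item. This is not the LLM Decontaminator itself. The function names, the trigram size, and the example sentences are all assumptions made up for illustration.

```python
def ngrams(text, n):
    """Return the set of word n-grams in a lowercased, whitespace-split text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def ngram_overlap(train_sample, test_sample, n=3):
    """Fraction of the test sample's n-grams that also occur in the training sample."""
    test_grams = ngrams(test_sample, n)
    if not test_grams:
        return 0.0
    return len(test_grams & ngrams(train_sample, n)) / len(test_grams)


# Hypothetical benchmark item and two hypothetical training samples.
test_item = "write a function that returns the sum of two integers"
verbatim_copy = "write a function that returns the sum of two integers"
rephrased = "implement a routine which adds a pair of whole numbers together"

for name, sample in [("verbatim", verbatim_copy), ("rephrased", rephrased)]:
    print(name, ngram_overlap(sample, test_item))
```

The verbatim copy shares every trigram with the test item, while the rephrased sample shares none, so an overlap threshold would flag only the former even though both pose the same problem. Closing that gap requires comparing meaning instead of surface tokens, which is the role the summary attributes to language models.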
