Large Language Models (LLMs) such as GPT-4 have become central to many applications because of their ability to generate human-like text. However, sending every task to the most capable LLM can be prohibitively expensive. A promising alternative is to route each query to a model suited to its difficulty: simple queries go to a cheaper model and only harder ones to the most capable model, which can preserve most of the quality while reducing costs.

RouteLLM, an open-source framework for routing queries across models, explores this idea in depth. For each query, a router dynamically decides whether to send it to a more capable but expensive model or to a less capable but cheaper one, aiming to strike a balance between cost and response quality.
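The per-query decision described above can be sketched as a score compared against a threshold. This is a minimal sketch, assuming the router outputs a score that estimates how likely the strong model is to give the better answer, and that the query goes to the strong model when the score clears a tunable threshold. `ThresholdRouter`, `toy_scorer`, and the model names `strong-model` / `weak-model` are hypothetical placeholders; the length-and-keyword scorer merely stands in for a trained router and is not how RouteLLM scores queries.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical scorer type: maps a query to an estimated probability that
# the strong model's answer would be preferred over the weak model's.
Scorer = Callable[[str], float]


@dataclass
class ThresholdRouter:
    scorer: Scorer
    strong_model: str
    weak_model: str
    threshold: float  # in [0, 1]; raising it sends fewer queries to the strong model

    def route(self, query: str) -> str:
        """Return the name of the model that should answer `query`."""
        p_strong_better = self.scorer(query)
        if p_strong_better >= self.threshold:
            return self.strong_model
        return self.weak_model


def toy_scorer(query: str) -> float:
    """Hypothetical stand-in for a trained router: longer queries and ones
    that ask for a proof or derivation are treated as harder."""
    score = min(len(query.split()) / 50, 0.6)
    if any(word in query.lower() for word in ("prove", "derive")):
        score += 0.4
    return min(score, 1.0)


router = ThresholdRouter(toy_scorer, strong_model="strong-model",
                         weak_model="weak-model", threshold=0.5)
print(router.route("What is the capital of France?"))
print(router.route("Prove that there are infinitely many primes."))
```

In this design the threshold is the single knob that trades cost for quality: the same scorer can be reused at different thresholds without retraining.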

RouteLLM's results are substantial. Using preference data from Chatbot Arena, the RouteLLM team trained several routers that cut costs significantly while still delivering close to top-tier model performance. For instance, a router based on matrix factorization achieved 95% of GPT-4's performance at roughly 48% lower cost than a baseline router. Even more striking, after the training data was augmented with labels produced by an LLM judge, reaching the same 95% of GPT-4's performance required sending only 14% of queries to GPT-4, a cost reduction of about 75% compared with the baseline router.
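Savings figures like these depend on two quantities: the share of queries sent to the strong model and the price gap between the two models. The sketch below illustrates that arithmetic, assuming a score-threshold router: `calibrate_threshold` picks a threshold so that a target share of sample queries goes to the strong model, and `relative_cost` and `savings_vs` turn that share into cost ratios. All function names, scores, and prices here are hypothetical, and the sketch does not reproduce RouteLLM's evaluation.

```python
import math


def calibrate_threshold(scores: list[float], strong_fraction: float) -> float:
    """Return a score threshold such that roughly `strong_fraction` of the
    sample queries score at or above it (ties can push the share higher)."""
    if not scores:
        raise ValueError("need at least one sample score")
    if not 0 < strong_fraction <= 1:
        raise ValueError("strong_fraction must be in (0, 1]")
    ranked = sorted(scores, reverse=True)
    # Number of sample queries that should go to the strong model.
    k = max(1, round(strong_fraction * len(ranked)))
    return ranked[k - 1]


def relative_cost(strong_fraction: float, strong_price: float, weak_price: float) -> float:
    """Average cost per query as a fraction of sending every query to the strong model."""
    blended = strong_fraction * strong_price + (1 - strong_fraction) * weak_price
    return blended / strong_price


def savings_vs(baseline_cost: float, router_cost: float) -> float:
    """Fractional cost saving of a router relative to a baseline."""
    return 1 - router_cost / baseline_cost


# Hypothetical router scores for a small calibration sample.
sample_scores = [0.9, 0.1, 0.5, 0.7, 0.3, 0.2, 0.8, 0.4]
threshold = calibrate_threshold(sample_scores, strong_fraction=0.25)

# Hypothetical per-query prices: the strong model costs 20x the weak one.
STRONG_PRICE, WEAK_PRICE = 1.0, 0.05
router_cost = relative_cost(0.14, STRONG_PRICE, WEAK_PRICE)    # 14% of queries to the strong model
baseline_cost = relative_cost(0.50, STRONG_PRICE, WEAK_PRICE)  # hypothetical baseline share
print(f"threshold={threshold}, router cost={router_cost:.3f}, "
      f"saving vs baseline={savings_vs(baseline_cost, router_cost):.1%}")
```

The 50% baseline share and the prices are placeholders chosen only to show the calculation; the reported savings come from RouteLLM's own comparisons against its baseline router.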

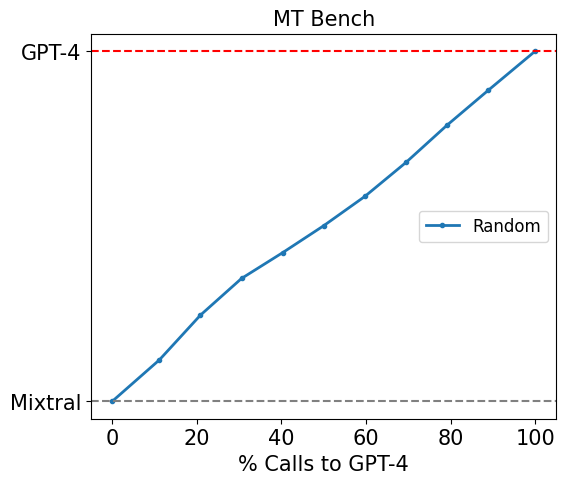

Moreover, RouteLLM's results are not confined to a single test: its routers have been evaluated on several benchmarks, including MT Bench, MMLU, and GSM8K. They also generalize well to new model pairs without retraining. For example, they route effectively between Claude 3 Opus and Llama 3 8B, even though neither model is part of the training data.

Beyond its technical results, RouteLLM stands out for its accessibility. The framework, along with all of its routers and datasets, is available on GitHub and Hugging Face, encouraging further innovation and application in the field.

For those interested in exploring the technology, a demo lets users send messages and see which model each message is routed to. This hands-on view shows how a routing algorithm can use resources efficiently while maintaining high response quality.

For more detailed insights and technical specifics, the team has documented its research, methodology, and results in a paper available on arXiv. All resources are publicly available to anyone who wants to incorporate or build upon RouteLLM, fostering a collaborative and open approach to AI research and development.

To read more about RouteLLM and explore their resources, visit their blog post.